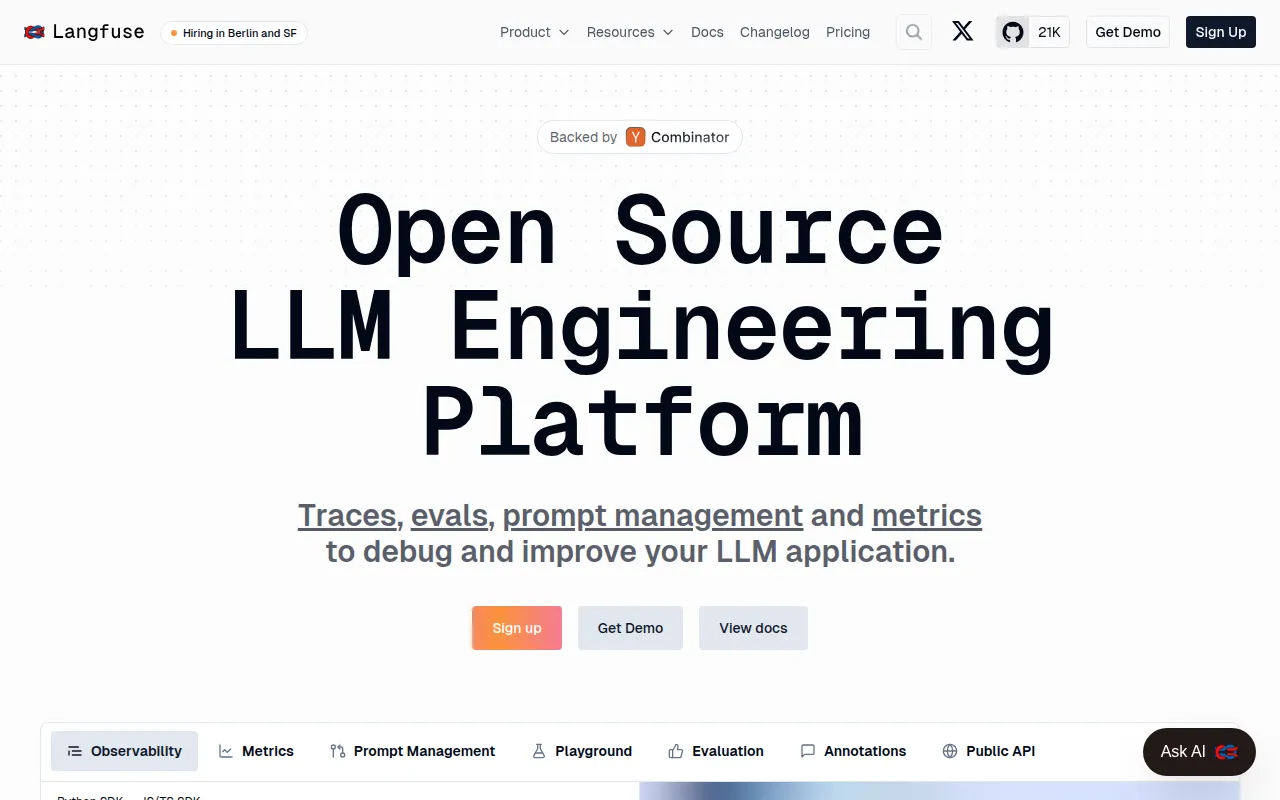

What is Langfuse

Langfuse is an open-source engineering platform for Large Language Model (LLM) applications. It provides tools for tracking, analyzing, and managing conversations, prompts, and evaluations, so teams can debug and improve those applications.

How to use Langfuse

Langfuse integrates into an LLM application through its SDKs. For example, the Python SDK provides the @observe decorator: applying it to functions traces each call and automatically links nested calls into a single trace. The langfuse.openai module wraps OpenAI's API so that chat completion calls are traced as well.
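The decorator pattern described above can be sketched as follows. This is an illustrative sketch under several assumptions, not an official example: the function names retrieve_context and answer are hypothetical, the import path for observe differs between SDK versions (both are tried), and the LLM call is stubbed so that the snippet runs without credentials or network access. If the langfuse package is not installed, a no-op stand-in decorator is used.

```python
# Try the import paths used by different Python SDK versions.
try:
    from langfuse import observe  # newer SDK versions
except ImportError:
    try:
        from langfuse.decorators import observe  # older SDK versions
    except ImportError:
        # Assumption: langfuse is not installed. This stand-in does no
        # tracing; it only lets the sketch run unchanged.
        def observe(func=None, **_kwargs):
            def wrap(f):
                return f
            return wrap(func) if callable(func) else wrap


@observe()
def retrieve_context(question: str) -> str:
    # Hypothetical helper. Called from inside answer(), so it would be
    # recorded as a nested step of that trace.
    return f"notes about: {question}"


@observe()
def answer(question: str) -> str:
    context = retrieve_context(question)
    # Stubbed LLM call. In a real application this is where a chat
    # completion would be requested, for example through the OpenAI
    # client imported from langfuse.openai instead of from openai.
    return f"Answer based on {context}"


if __name__ == "__main__":
    print(answer("What is Langfuse?"))
```

With the real SDK installed and API keys configured, the outer call to answer would appear as one trace with retrieve_context nested beneath it; without keys, the functions still return their normal values.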

Features of Langfuse

- Observability: Captures complete traces of LLM applications and agents.

- Traces: Inspect failures and build evaluation datasets.

- Evaluations: Tools for assessing LLM performance.

- Prompt Management: Manage and optimize prompts.

- Metrics: Track key performance indicators for LLM applications.

- SDKs: Available for Python and JavaScript/TypeScript.

- Integrations: Supports various LLM providers (e.g., OpenAI), frameworks (e.g., LangChain, LlamaIndex), and gateways (e.g., LiteLLM).

- Playground: An environment for experimenting with LLM interactions.

- Annotations: Facilitates human annotation of LLM outputs.

- Public API: Allows programmatic access to Langfuse features.

Use Cases of Langfuse

- Debugging and improving LLM applications by analyzing conversation traces.

- Building evaluation datasets from observed LLM interactions.

- Managing and versioning prompts used in LLM applications.

- Monitoring and analyzing the performance of LLM applications through metrics.

Pricing

Langfuse offers a cloud-hosted version of the application that you can sign up for. Specific pricing details are not listed here.