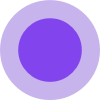

What is LTX-2

LTX-2 is the first production-ready, open-source AI model designed to generate synchronized 4K video and audio. It creates video clips of up to 20 seconds at up to 50 frames per second, with audio generated natively alongside the picture. The model is built on a 19-billion-parameter Diffusion Transformer (DiT) architecture and is optimized for NVIDIA hardware.

How to use LTX-2

- Access the Interactive Demo: Try LTX-2 directly in your browser through the interactive demo.

- Use the GitHub Repository: For local deployment and customization, clone the LTX-2 GitHub repository.

- Integrate via API: LTX-2 can be accessed through platforms like Fal, Replicate, and RunDiffusion, or you can deploy your own API using the official inference code.

- Fine-tuning: The model supports LoRA fine-tuning for custom styles, motion patterns, or subject likenesses. Training code and documentation are available in the repository.

Features of LTX-2

- 4K Resolution at 50 FPS: Generates native 4K (2160p) video at up to 50 frames per second.

- Synchronized Audio Generation: Generates synchronized audio, including dialogue, ambient sound, and music, in the same pass as the video.

- Text & Image to Video: Transforms text prompts or static images into dynamic video content.

- Up to 20-Second Clips: Generates extended clips that stay temporally coherent.

- NVIDIA Optimized: Generates 3x faster with the NVFP4 format on RTX 50 Series GPUs, and optimized weights reduce VRAM usage by 40%.

- Fully Open-Source: Provides complete access to model weights, training code, and inference pipeline under the Apache 2.0 license.

- Local Execution: Enables local deployment and custom fine-tuning.
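
Lower-precision formats help with VRAM because weight storage scales with bytes per parameter. The following back-of-envelope sketch estimates the memory needed just to store 19 billion weights at a few precisions. It ignores activations, any separate encoders or VAE, framework overhead, and the per-block scale factors that FP4 formats such as NVFP4 carry. Treat the results as lower bounds on weight storage, not as measured VRAM usage.

```python
# Back-of-envelope weight-storage estimate for a 19B-parameter model.
# Assumptions: only the weights are counted; activations, encoders, runtime
# overhead, and FP4 block-scale factors are ignored.

PARAMS = 19e9  # parameter count stated in the text

BYTES_PER_PARAM = {
    "bf16": 2.0,
    "fp8": 1.0,
    "fp4": 0.5,
}


def weight_memory_gb(precision: str, params: float = PARAMS) -> float:
    """Return weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        print(f"{precision}: {weight_memory_gb(precision):.1f} GB")
```

Under these assumptions, BF16 weights take 38 GB, FP8 takes 19 GB, and FP4 takes 9.5 GB. These figures are not the source's measurements, and they do not reproduce the 40% figure quoted above, which refers to the project's own optimized weights.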

Use Cases of LTX-2

- Content Creation: Stock footage generation, social media content, marketing videos, product demonstrations, video templates.

- Film & Animation: Pre-visualization, VFX reference footage, concept development, storyboard animation, background generation.

- Education & Training: Instructional videos, simulation content, educational materials, training scenarios, e-learning resources.

- Research & Development: AI research experiments, computer vision training data, model development, synthetic data generation, academic projects.

FAQ

What makes LTX-2 different from other AI video models?

LTX-2 is the first fully open-source model to generate synchronized audio and video, and it supports true 4K resolution at up to 50 FPS. It offers complete access to the weights, training code, and inference pipeline under the Apache 2.0 license, enabling local execution and custom fine-tuning.

What hardware do I need to run LTX-2?

Recommended: an NVIDIA RTX 40 or 50 Series GPU with at least 16 GB of VRAM, Python 3.10 or later, and CUDA 12.7 or later. The model runs on an RTX 5090 (4K generation in about 3 minutes) or an RTX 4090. Optimized versions support FP8 and FP4 formats to reduce memory usage. Cloud deployment is also an option.
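
The software and memory recommendations above can be turned into a quick preflight check. The following is a minimal sketch that compares version tuples and a VRAM figure against those minimums. It does not detect hardware itself, and it does not check the GPU series. The CUDA version and VRAM values in the example are placeholders that you would read from your own system, for instance from `nvidia-smi`.

```python
import sys

# Minimums taken from the recommendation above (Python 3.10+, CUDA 12.7+, 16 GB+ VRAM).
MIN_PYTHON = (3, 10)
MIN_CUDA = (12, 7)
MIN_VRAM_GB = 16


def check_requirements(python_version, cuda_version, vram_gb: float) -> list[str]:
    """Return a list of unmet requirements; an empty list means every check passed."""
    problems = []
    if tuple(python_version[:2]) < MIN_PYTHON:
        problems.append(f"Python {python_version[0]}.{python_version[1]} is older than 3.10")
    if tuple(cuda_version[:2]) < MIN_CUDA:
        problems.append(f"CUDA {cuda_version[0]}.{cuda_version[1]} is older than 12.7")
    if vram_gb < MIN_VRAM_GB:
        problems.append(f"{vram_gb} GB of VRAM is below the recommended 16 GB")
    return problems


if __name__ == "__main__":
    # Placeholder CUDA version and VRAM: replace with the values for your GPU.
    issues = check_requirements(sys.version_info, cuda_version=(12, 8), vram_gb=24)
    print("All recommended minimums met" if not issues else "\n".join(issues))
```

Comparing tuples such as `(12, 8) < (12, 7)` avoids the string-comparison pitfall where `"12.10"` would sort before `"12.7"`.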

Is LTX-2 really free to use?

Yes. LTX-2 is fully open-source under the Apache 2.0 license, so you can use it for commercial and non-commercial purposes, modify the code, and fine-tune the model. All weights, training code, and documentation are publicly available on GitHub.

How long does it take to generate a video?

Performance depends on the hardware. On an RTX 5090, a 10-second 4K video takes approximately 3 minutes (versus 15 minutes without optimization), and a 4-second 720p clip takes approximately 25 seconds. The NVFP4 format makes generation 3x faster. RTX 40 Series and cloud GPUs have different performance profiles.
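
The RTX 5090 figures are easier to compare when normalized to seconds of compute per second of output video. The calculation below uses only the approximate timings quoted in this answer. Real timings depend on generation settings that the answer does not specify.

$$
\frac{15\ \text{min}}{3\ \text{min}} = 5\times \ \text{faster than unoptimized (4K, 10 s clip)}
$$

$$
\frac{180\ \text{s of compute}}{10\ \text{s of video}} = 18 \ \text{(4K)}, \qquad \frac{25\ \text{s of compute}}{4\ \text{s of video}} = 6.25 \ \text{(720p)}
$$

This 5x gap is larger than the 3x speedup attributed to NVFP4. The answer does not say which optimizations account for the difference.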

Can I fine-tune LTX-2 on my own data?

Yes. LTX-2 supports LoRA fine-tuning for custom styles, motion patterns, or subject likenesses. Training can be completed in under an hour on capable hardware, and the repository includes training code and documentation for customization.
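
LoRA (low-rank adaptation) explains why fine-tuning can be this cheap. It keeps each pretrained weight matrix W frozen and trains two small matrices, B (d_out × r) and A (r × d_in). Their product, scaled by alpha / r, is added to W's output. The sketch below shows this generic technique in plain Python; it is not the LTX-2 trainer. The 4096 × 4096 layer size and rank 32 are illustrative assumptions, not LTX-2's actual configuration.

```python
# Generic LoRA arithmetic, not LTX-2's training code.
# Layer sizes in the example are illustrative assumptions.


def matvec(matrix: list[list[float]], vector: list[float]) -> list[float]:
    """Multiply a row-major matrix by a column vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha: float, rank: int) -> list[float]:
    """Compute y = W x + (alpha / rank) * B (A x).

    W (d_out x d_in) is the frozen pretrained weight; only A (rank x d_in)
    and B (d_out x rank) are trained during fine-tuning.
    """
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


def lora_param_counts(d_out: int, d_in: int, rank: int) -> tuple[int, int]:
    """Return (full fine-tuning params, LoRA trainable params) for one matrix."""
    return d_out * d_in, rank * (d_out + d_in)


if __name__ == "__main__":
    full, lora = lora_param_counts(4096, 4096, rank=32)
    print(full, lora)
```

For a 4096 × 4096 matrix at rank 32, LoRA trains 262,144 parameters instead of 16,777,216, which is 1/64 of the full matrix. This reduction is what makes short training runs feasible.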

What video formats and resolutions are supported?

LTX-2 outputs videos in MP4 format. Supported resolutions range from 720p to 4K (2160p UHD), at frame rates of up to 50 FPS. For optimal results, width and height should be multiples of 32, and the frame count should be a multiple of 8 plus 1 (for example, 121 frames).
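
This answer gives two dimension rules: width and height in multiples of 32, and a frame count of the form 8k + 1. The sketch below snaps a requested size and duration to values that follow those rules. Two choices here are assumptions rather than documented LTX-2 behavior: reading "8+1" as 8k + 1, and rounding width and height down.

```python
# Snap requested sizes to the constraints described above.
# Assumptions: width/height are rounded DOWN to a multiple of 32, and
# "8+1 for frames" is read as a frame count of the form 8 * k + 1.

SPATIAL_MULTIPLE = 32
FRAME_STEP = 8


def snap_spatial(value: int, multiple: int = SPATIAL_MULTIPLE) -> int:
    """Round a width or height down to a multiple of `multiple` (at least one multiple)."""
    return max(multiple, (value // multiple) * multiple)


def snap_frames(num_frames: int, step: int = FRAME_STEP) -> int:
    """Round a frame count to the nearest value of the form step * k + 1 (ties round up)."""
    k = max(0, (num_frames - 1 + step // 2) // step)
    return step * k + 1


def frames_for_duration(seconds: float, fps: int) -> int:
    """Convert a clip length to a valid frame count at the given frame rate."""
    return snap_frames(round(seconds * fps))


if __name__ == "__main__":
    print(snap_spatial(1280), snap_spatial(720), frames_for_duration(4, 50))
```

This prints `1280 704 201`. The height changes because 720 is not a multiple of 32, and the frame count changes because 4 s × 50 FPS = 200 frames snaps to 201. Likewise, a 20-second clip at 50 FPS (1,000 frames) snaps to 1,001. Rounding down rather than to the nearest multiple is a design choice in this sketch. The official pipeline may pad, crop, or resize instead, so check the repository before relying on it.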

How does audio generation work?

LTX-2 generates synchronized audio alongside the video in a single inference pass, including ambient sound, music, and effects. For dialogue, include explicit speech cues in the prompt. Audio quality is best when the prompt includes sound-related context.
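
The advice to include explicit speech cues can be illustrated with a small prompt-building helper. Both the helper and its phrasing are hypothetical conventions for illustration, not a documented LTX-2 prompt format. The helper quotes each line of dialogue after `<speaker> says:` and lists sounds in a trailing `Audio:` clause.

```python
from __future__ import annotations


def build_prompt(scene: str,
                 dialogue: list[tuple[str, str]] | None = None,
                 sounds: list[str] | None = None) -> str:
    """Compose a prompt with explicit speech and sound cues.

    The phrasing ('<speaker> says: "..."' and a trailing 'Audio:' clause) is an
    illustrative convention, not a documented LTX-2 prompt syntax.
    """
    parts = [scene.strip().rstrip(".") + "."]
    for speaker, line in dialogue or []:
        parts.append(f'{speaker} says: "{line}"')
    if sounds:
        parts.append("Audio: " + ", ".join(sounds) + ".")
    return " ".join(parts)
```

For example, `build_prompt("A barista wipes the counter in a quiet cafe", [("The barista", "We're closing in five minutes.")], ["espresso machine hiss", "soft jazz"])` produces a single prompt in which the spoken line and the background sounds are stated explicitly rather than implied.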

Is there a cloud API available?

Yes. LTX-2 is available through platforms including Fal, Replicate, and RunDiffusion. You can also deploy your own API with the official inference code, and self-hosting gives you complete control and privacy.
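
With a self-hosted deployment, a client typically sends a JSON request to your server. The sketch below uses only the Python standard library. The endpoint URL `http://localhost:8000/generate`, the route, and every payload field name are hypothetical placeholders. The actual request schema is whatever your server around the official inference code defines, and hosted platforms such as Fal or Replicate have their own clients and schemas.

```python
import json
import urllib.request

# Hypothetical endpoint for a self-hosted server wrapping the official inference code.
ENDPOINT = "http://localhost:8000/generate"


def build_request(prompt: str, width: int = 1280, height: int = 704,
                  num_frames: int = 201, fps: int = 50,
                  endpoint: str = ENDPOINT) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request; all field names are hypothetical."""
    payload = {
        "prompt": prompt,
        "width": width,
        "height": height,
        "num_frames": num_frames,
        "fps": fps,
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, timeout: float = 600.0, **kwargs) -> bytes:
    """Send the request and return the raw response body (e.g. MP4 bytes, if the server returns them)."""
    with urllib.request.urlopen(build_request(prompt, **kwargs), timeout=timeout) as resp:
        return resp.read()
```

Suppose such a server is running and returns MP4 bytes. You could then call `video = generate("A lighthouse at dusk, waves crashing")` and write `video` to a file opened in binary mode. The defaults (1280x704, 201 frames, 50 FPS) are arbitrary example values. The long timeout reflects that generation can take minutes.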

What are the current limitations?

LTX-2 may inherit biases from its training data and might not match every complex prompt exactly. Audio quality can drop when prompts lack explicit speech cues, and unoptimized runs have high resource demands. The model is designed for creative generation, not for providing factual information, so apply appropriate safeguards in production.

How can I contribute to the project?

Contributions are welcome on GitHub. You can submit bug reports, feature requests, code improvements, or documentation updates. You can also join the community discussions, share your generated content, and help improve the model. Check the official repository for contribution guidelines.